Collaborative Music Live Coding

This blog post is a follow-up summary of my lightning talk “Challenges and Opportunities of Collaborative Music Live Coding: A Practitioner’s Approach”, presented at Café 1001 in London during the event The RAW, Inter/sections 2018: “The RAW focusses on Live Coding and code based approaches to the real time creation of sound and image. For those yet to make its acquaintance, Live Coding is the creation of sonic or visual content generated through the execution of computer code in real time”.

CSCM, Network Music and CMLC

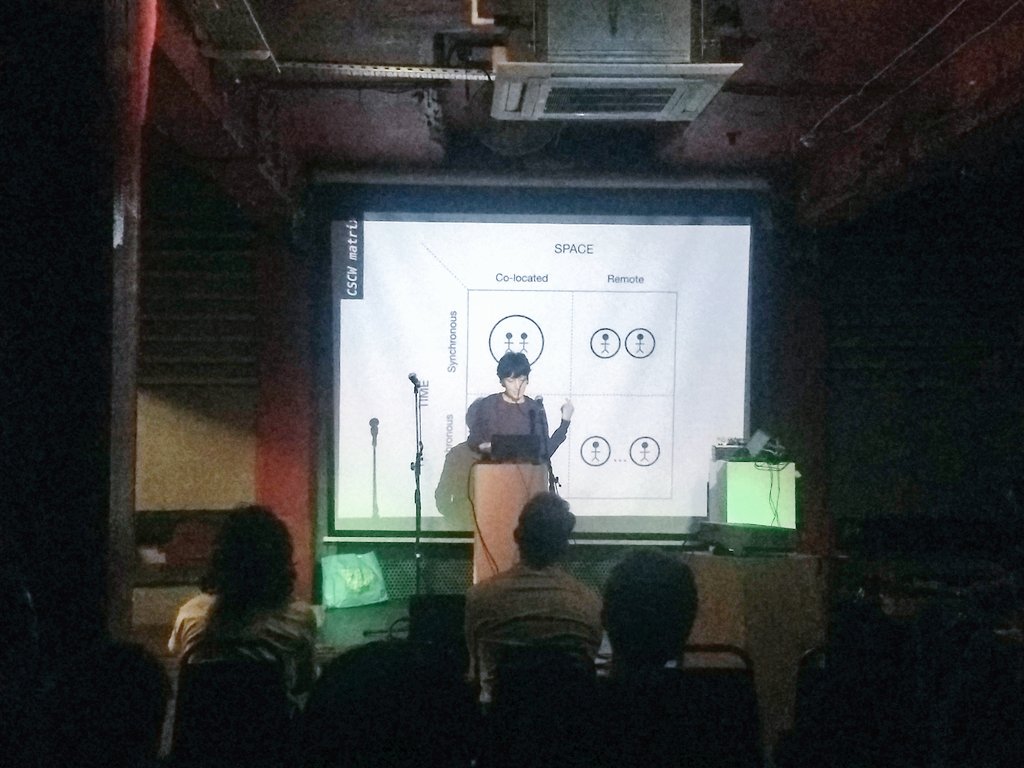

When discussing collaborative music live coding (CMLC), I always like to frame it within the area of computer-supported cooperative work (CSCW) and, in particular, the CSCW time/space matrix that Álvaro Barbosa adapted to computer-supported collaborative music (CSCM) (Figure 1). This matrix shows that collaboration can take many forms, and that time and space are two important factors shaping the nature of computer-supported collaboration. Typically, CSCM activities happen simultaneously, either in co-located settings (musicians situated in the same location) or in remote settings (musicians distributed across different locations). It is also possible to find instances of asynchronous co-located CSCM, as highlighted in our research exploring social interaction with the Reactable in a science museum (Xambó et al. 2017).
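The time/space matrix can be read as a simple two-dimensional classification: two yes/no questions (do participants act at the same time? in the same place?) select one of four cells. The Python sketch below is a minimal illustration under that assumption only; the names `Time`, `Space`, `Cell`, `classify`, and the example activities are hypothetical and are not part of Barbosa's framework.

```python
from enum import Enum
from typing import NamedTuple


class Time(Enum):
    SYNCHRONOUS = "synchronous"    # participants act at the same time
    ASYNCHRONOUS = "asynchronous"  # participants act at different times


class Space(Enum):
    CO_LOCATED = "co-located"  # participants share one physical location
    REMOTE = "remote"          # participants are in different locations


class Cell(NamedTuple):
    """One cell of the time/space matrix."""
    time: Time
    space: Space

    def label(self) -> str:
        return f"{self.time.value} {self.space.value}"


def classify(same_time: bool, same_place: bool) -> Cell:
    """Answer two yes/no questions to place an activity in the matrix."""
    time = Time.SYNCHRONOUS if same_time else Time.ASYNCHRONOUS
    space = Space.CO_LOCATED if same_place else Space.REMOTE
    return Cell(time, space)


# Hypothetical example activities, loosely based on scenarios in this post.
activities = {
    "laptop ensemble on one stage": classify(same_time=True, same_place=True),
    "network concert between two cities": classify(same_time=True, same_place=False),
    "successive museum visitors at one Reactable": classify(same_time=False, same_place=True),
}

for name, cell in activities.items():
    print(f"{name}: {cell.label()}")
```

Only the two axes come from the matrix itself; where a given performance or installation falls is a judgment the classification cannot make for you.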

Another important reference is network music (from John Cage and Stockhausen to the League of Automatic Music Composers/The Hub, the ensemble powerbooks_unplugged, SLOrk, and women’s networks in CSCM such as FLO and OFFAL). In particular, the term “musical networks” refers to local or remote musicians and computers connected by a network, independently of the musicians’ locations. Describing the characteristics of musical networks is beyond the scope of this blog post (cf. Xambó 2015), but it is worth mentioning that interdependency is a key characteristic: the capacity of musicians to influence, share, and shape each other’s music in real time.

CMLC is commonly found in performance, where simultaneous interaction typically emerges in co-located and/or remote groups ranging from a few to many participants. Another interesting application of CMLC is education. When I was at the Georgia Tech Center for Music Technology (GTCMT), we conducted a number of studies looking at different configurations of CMLC using EarSketch in education (leading to 5 publications between 2016 and 2017). We looked at duo, trio, and multiple live coding, and we also explored the mechanism of turn-taking. The results indicated that trio live coding can be interesting in a classroom setting because more roles are involved in the collaboration than in pair live coding. However, turn-taking can be tedious and slow, which could negatively affect a live performance.
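Turn-taking can be pictured as a round-robin schedule over a shared code buffer. The Python sketch below assumes fixed-length turns and a fixed rotation order. It is a generic scheme for illustration, not the protocol used in the EarSketch studies, and `schedule`, `wait_between_turns`, and the performer names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def schedule(performers: Sequence[str], turn_seconds: int, total_seconds: int) -> List[Tuple[int, str]]:
    """Round-robin turns: return (start_second, performer) for each turn."""
    if not performers:
        raise ValueError("need at least one performer")
    if turn_seconds <= 0:
        raise ValueError("turn length must be positive")
    n_turns = math.ceil(total_seconds / turn_seconds)
    return [(i * turn_seconds, performers[i % len(performers)]) for i in range(n_turns)]


def wait_between_turns(performers: Sequence[str], turn_seconds: int) -> int:
    """Seconds each performer waits while the others hold the turn."""
    return (len(performers) - 1) * turn_seconds


trio = ["A", "B", "C"]  # hypothetical performer names
print(schedule(trio, turn_seconds=30, total_seconds=120))
# [(0, 'A'), (30, 'B'), (60, 'C'), (90, 'A')]
print(wait_between_turns(trio, turn_seconds=30))        # 60
print(wait_between_turns(["A", "B"], turn_seconds=30))  # 30
```

Under these assumptions, waiting time grows linearly with group size: each member of a trio sits out twice as long between turns as each member of a pair. This offers one plausible reading of why turn-taking can feel slow on stage, even when the extra roles are useful in a classroom.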

We also speculated about future scenarios, envisioning a virtual agent that can help students to improve their programming and musical skills, and can help musicians to exploit computational creativity applied to music.

Where Are the Boundaries of CMLC?

CMLC has interesting opportunities within and beyond co-located synchronous group interaction, and it is a suitable space for democratic, decentralized music. Next, I would like to list a few examples from personal experience that show how collaboration can take multiple shapes within and beyond co-located, simultaneous collaborative music live coding: workshops on network music, crowdsourced content used in live coding, concerts/algoraves, and collaborative albums of live coding music.

CMLC: Workshops

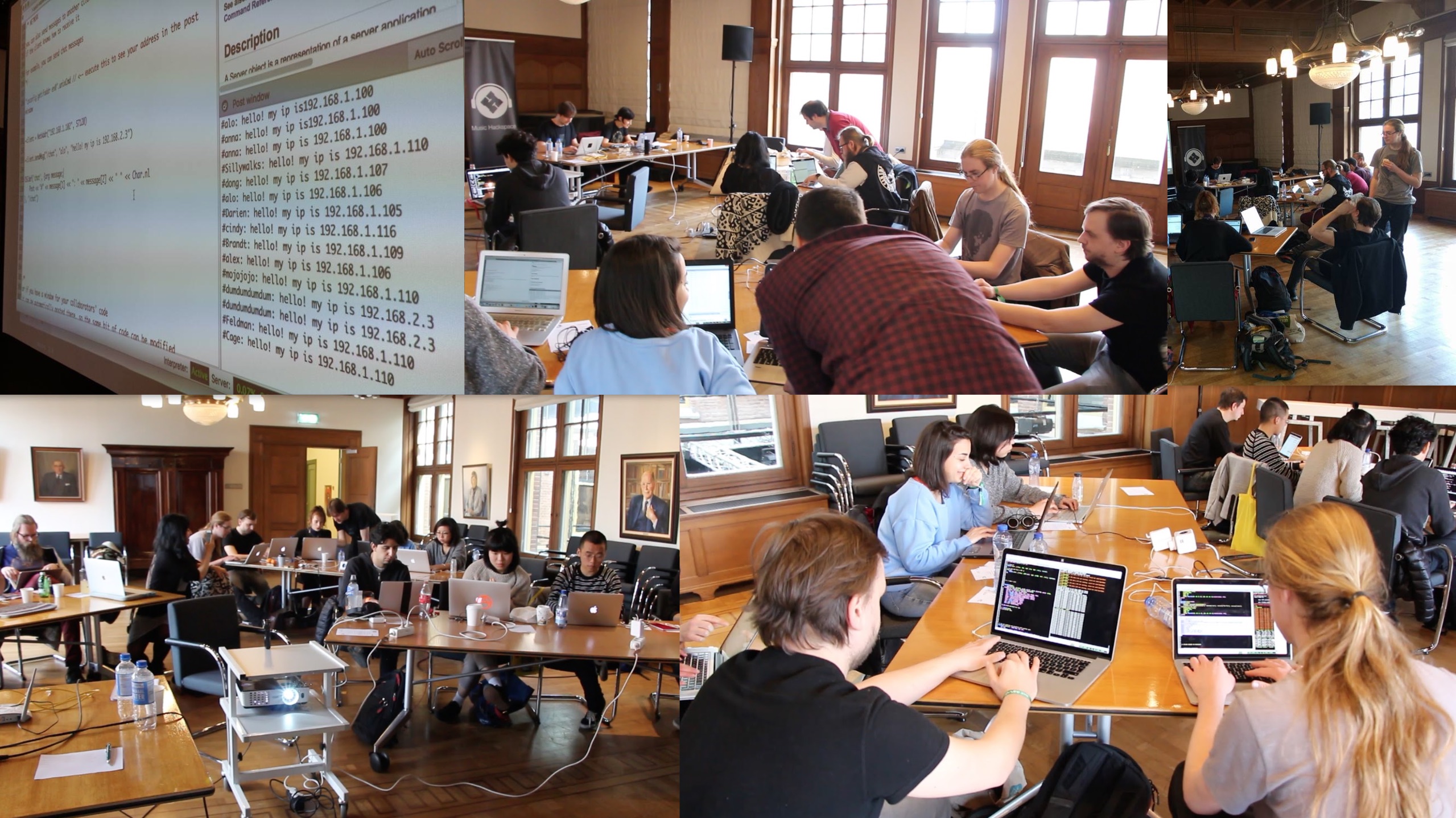

In 2018, Alo Allik and I led the 2-day workshop “Collaborative Network Music Workshop” (organized by Music Hackspace and funded by Rewire). As shown in Figure 2, the workshop led to decentralized music participation in a peer-learning context. The workshop was designed to introduce the main concepts of collaborative network music and the core building blocks of SuperCollider. The impromptu improvisations that emerged from the sessions were distantly reminiscent of the historic ensemble powerbooks_unplugged.

CMLC: Crowdsourced Content

MIRLCRep is a self-built library for SuperCollider that explores real-time music information retrieval (MIR) applied to live coding, using crowdsourced databases (in particular, sounds from the online database Freesound.org) as well as local databases (Xambó et al. 2018). This library allows for the live repurposing of Creative Commons licensed sounds, which illustrates an asynchronous form of CMLC: the live coder performs with material that other people contributed at other times. The username of each retrieved sound’s uploader is printed on screen together with the title of the audio sample, and a list of credits is stored on the computer for future reference.
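The credit mechanism described above can be illustrated outside SuperCollider. The Python sketch below assumes each retrieved sound arrives as a small record with an identifier, title, uploader username, and license. `SoundCredit`, `CreditLog`, the CSV format, and the sample data are all hypothetical. The sketch does not reproduce MIRLCRep’s implementation or call the Freesound API.

```python
import csv
import tempfile
from dataclasses import asdict, dataclass, fields
from pathlib import Path
from typing import List


@dataclass(frozen=True)
class SoundCredit:
    """Metadata kept for one retrieved sound (hypothetical field names)."""
    sound_id: int
    title: str
    username: str
    license: str


class CreditLog:
    """Announce each retrieved sound on screen and keep a list of credits."""

    def __init__(self, path: Path) -> None:
        self.path = path
        self.entries: List[SoundCredit] = []

    def announce(self, credit: SoundCredit) -> str:
        # Show who uploaded the sound together with its title.
        line = f'"{credit.title}" by {credit.username}'
        print(line)
        # A sound may be retrieved several times; credit it only once.
        if credit not in self.entries:
            self.entries.append(credit)
        return line

    def save(self) -> None:
        """Write all collected credits to a CSV file for future reference."""
        names = [f.name for f in fields(SoundCredit)]
        with self.path.open("w", newline="", encoding="utf-8") as fh:
            writer = csv.DictWriter(fh, fieldnames=names)
            writer.writeheader()
            for entry in self.entries:
                writer.writerow(asdict(entry))


# Usage with made-up sounds; no network access is involved.
with tempfile.TemporaryDirectory() as tmp:
    log = CreditLog(Path(tmp) / "credits.csv")
    log.announce(SoundCredit(1, "rain on tin roof", "user_a", "CC BY 4.0"))
    log.announce(SoundCredit(2, "door creak", "user_b", "CC0"))
    log.announce(SoundCredit(1, "rain on tin roof", "user_a", "CC BY 4.0"))
    log.save()
    print(log.path.read_text(encoding="utf-8"))
```

Storing the license next to the username is a design choice worth considering, because Creative Commons attribution licenses require crediting the author.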

CMLC: Concerts & Algoraves

Concerts centered on live coding, or algoraves, typically featuring a line-up of live coders, can also be considered instances of CMLC. I have been involved in co-organizing a number of such events:

- Live Coding Sessions I (2012) @ Niu, Barcelona on 14 March 2012. An evening of multivariate live coding performances with Graham Coleman, Pulso, Miquel Parera. Organized by Gerard Roma and Anna Xambó.

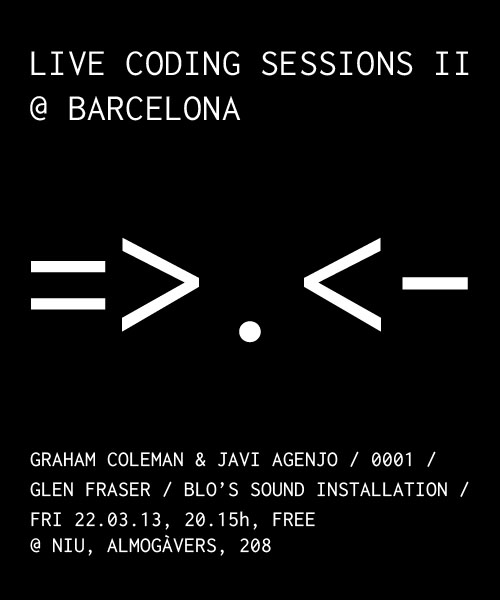

- Live Coding Sessions II (2013) @ Niu, Barcelona on 22 March 2013. Live coders: Graham Coleman & Javi Agenjo, 0001, Glen Fraser, Blo’s sound installation (Figure 4). Organized by Gerard Roma and Anna Xambó.

- Perspectives on multichannel live coding (2013) @ Sala Polivalent, UPF. Co-organized with Phonos and Gerard Roma, with the help of Anna Xambó. A multichannel live coding concert with 16 speakers on 4 October 2013, presenting different approaches to multichannel improvisation with code: Graham Coleman (remote), Alex McLean (remote), Miquel Parera, Gerard Roma, Anna Xambó. The TOPLAP logo was modified to represent multiplicity (Figure 5).

And, of course, the Algorave “The RAW”, organized by Inter/sections, is yet another instance of this kind of collaboration: in this case, live coders are combined with visualists we had not met before, and the sum of the different performers creates a musical network based on asynchronous co-located collaboration.

CMLC: Albums

A natural follow-up to these thematic live coding concerts is to produce a music album. We have explored this route with Carpal Tunnel, the online music netlabel I co-founded with Gerard Roma. We released Noiselets, a live album of the homonymous concert that we curated, featuring 7 musicians, including ourselves: scmute, peterMann, Martin Hug, Faraldo, 0001, Miquel Parera, Urge to Kill. It was a collaborative experience in which we shared the whole album-making process, from mastering to design to promotion (Figure 6).

Compilation albums of live coding are another instance of CMLC. A seminal and highly influential example is sc140 (2009), an album curated by Dan Stowell that presents 22 pieces, each written in no more than 140 characters of SuperCollider code.

Open questions / Future work

What are the potential future areas of research in CMLC? Based on the discussion and research above, I highlight the following themes as interesting to explore:

- Virtual agents (VA): collaborations between VAs and human agents (HA), as well as VA-VA collaborations.

- Mobile/participatory music and live coding: what would it look like? See, for example, the Michigan Mobile Phone Ensemble (Essl 2010).

- Collaborations between visualists and live coders: what to prioritize?

- Live coding and tangibles: can we live code using a littleBits style of physical interaction?

- Network music with other instruments (not only live coders with laptops): what would it look like?

- Live coding and education: bringing not only live coding but also CMLC into the classroom can help explore alternative approaches to music education and foster innovation in music creation.

Algorave

The day was rounded off with an Algorave featuring Renick Bell, Joanne Armitage, 0001, Alo Allik, Lizzie Wilson, Tsun Winston Yeung, Type, and peterMann, among others. I contributed a performance exploring some of the above ideas on crowdsourced live coding, using my self-built library MIRLC. For those interested, there will be a follow-up performance early next year at the next International Conference on Live Coding (ICLC 2019) at Medialab Prado, Madrid. Stay tuned!

Acknowledgments

Thanks to the organizers of the Inter/sections 2018 event, especially Jon Pigrem, Lizzie Wilson, Andy Thompson, Sophie Skach, Mathieu Barthet and James Weaver.

References

- Barbosa, A. (2006). “Displaced Soundscapes”. Computer-Supported Cooperative Work for Music Applications.

- Essl, G. (2010). “The Mobile Phone Ensemble as Classroom”. In Proceedings of the International Computer Music Conference (ICMC 2010).

- Xambó, A. (2015). “Tabletop Tangible Interfaces for Music Performance: Design and Evaluation”. PhD thesis. The Open University.

- Xambó, A., Freeman, J., Magerko, B., Shah, P. (2016). “Challenges and New Directions for Collaborative Live Coding in the Classroom”. In International Conference on Live Interfaces (ICLI 2016). Brighton, UK.

- Xambó, A., Hornecker, E., Marshall, P., Jordà, S., Dobbyn, C. and Laney, R. (2017). “Exploring Social Interaction with a Tangible Music Interface”. Interacting with Computers, 29:2, pp. 248-270.

- Xambó, A., Roma, G., Lerch, A., Barthet, M., Fazekas, G. (2018). “Live Repurposing of Sounds: MIR Explorations with Personal and Crowdsourced Databases”. In Proceedings of the International Conference on New Interfaces for Musical Expression (NIME ’18). Blacksburg, Virginia, USA. pp. 364-369.

- Xambó, A., Roma, G., Shah, P., Freeman, J., Magerko, B. (2017). “Computational Challenges of Co-creation in Collaborative Music Live Coding: An Outline”. In 2017 Co-Creation Workshop at the International Conference on Computational Creativity. Atlanta, GA, USA.

- Xambó, A., Roma, G., Shah, P., Tsuchiya, T., Freeman, J. and Magerko, B. (2018). “Turn-taking and Online Chatting in Co-located and Remote Collaborative Music Live Coding”. Journal of the Audio Engineering Society, 66(4), pp. 253–256.

- Xambó, A., Shah, P., Roma, G., Freeman, J., Magerko, B. (2017). “Turn-taking and Chatting in Collaborative Music Live Coding”. In Proceedings of the Audio Mostly 2017 Conference. London, UK.

Note: The ideas of this text were presented as a lightning talk at The RAW, Inter/sections 2018, held at Café 1001, London, UK, on September 28, 2018.