How to Represent the Umami Taste in Digital Performance?

This blog post is inspired by a conversation with Thor Magnusson during his visit at Queen Mary University of London last week. We talked about his AHRC funded project Sonic Writing and the challenge of score representations for digital performance. It is a difficult endeavor to accurately represent in a timeline the act of a digital performance. Three examples of my latest collaborative work and practice in music technology show our attempts to represent the structure and content of our music tech pieces. In the rest of this blog post, I argue the reasons why we have not been successful yet, and the open questions that emerge toward finding a suitable anti-score representation of these new artistic forms.

1. Beacon

Beacon is an audiovisual immersive piece by Anna Weisling (visualist) and Anna Xambó (electronic musician). The piece has been performed at the NIME 2017 conference (Stengade, Copenhagen, Denmark) and Root Signals Festival 2017 (Georgia Southern University, Statesboro, Georgia, United States).

The visualist generates visuals with the Distaff system, a DIY modified Technics turntable that communicates with a MaxMSP patch. The sound of the visualist’s fingertips and nails interacting with the mechanical Distaff (e.g., scratching, pressing) are captured with a lavalier mic. This audio input signal is treated by the electronic musician, using a customized library in SuperCollider, as either pure audio, audio signal, or control signal depending on the passages of the piece.

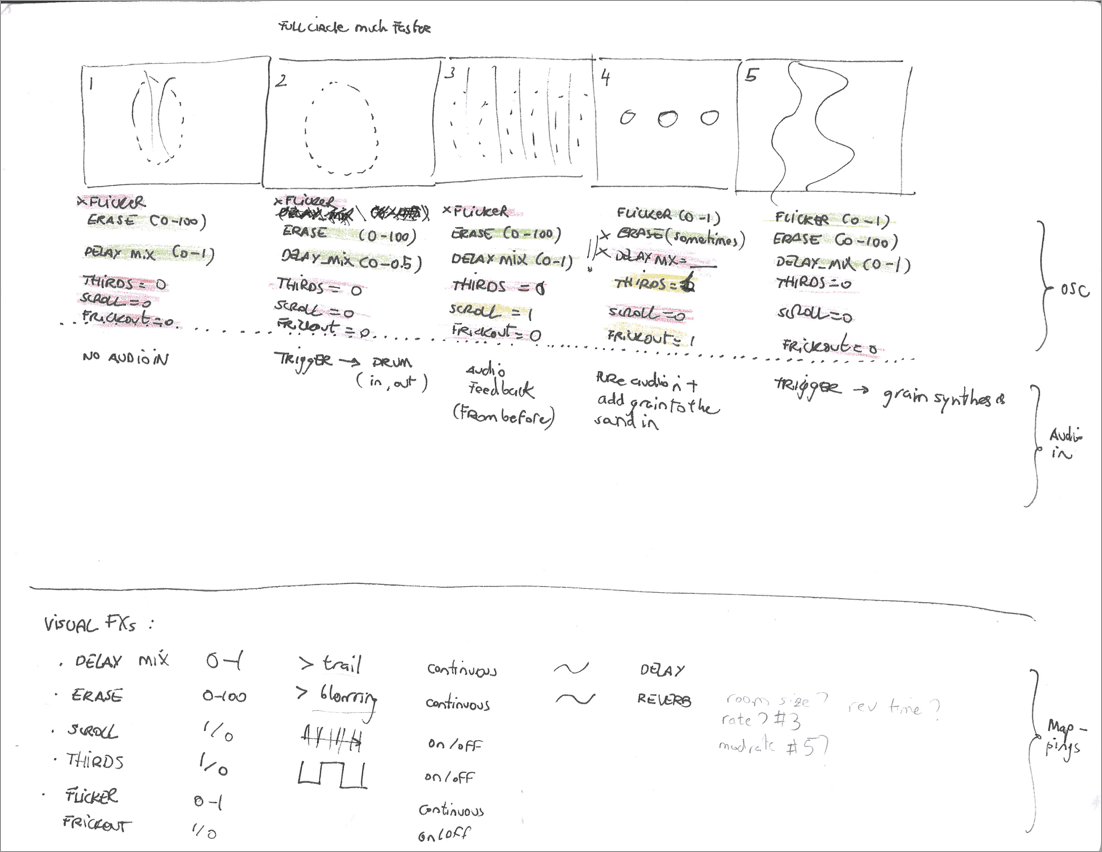

As shown in Figure 2, we structure the relationship between the visuals and the sound on a timeline of four episodes. For each section, we: (1) illustrate the overall visual mood; (2) list the parameters and range of values used by the visual engine; (3) define the overall sound mood (i.e., how the audio input signal is treated); and (4) outline the mappings of the visual engine including the name of the parameter, range of values, and visual effect.

In this example, we have worked with the following factors:

- time relative to the piece and also an absolute time for each part;

- mappings that refer to parameters and ranges of values. This includes visual mappings from the physical device to the code, sound mappings from the incoming audio to the sound output, and AV mappings from the code for visuals to the code for sound;

- visual features with a visual drawing that represents in a snapshot the main visual characteristics of each section; and

- sound features with the main aural characteristics for each section.

We have used this “score” for rehearsal and final performance, yet it has been used just as a guiding tool. This helped us to understand the AV elements in each section. Every rehearsal and performance has been different due to the duration of each episode, the nature of the incoming audio signal, the performance venue constraints (e.g. multichannel vs stereo), and so on.

This approach reminds a state diagram with the difference of presence vs absence of time information. In future “score” iterations, we can consider including more abstractions from our respective code in MaxMSP and SuperCollider. As an ongoing project, we are currently investigating mutual interaction. How to visually represent our collaboration as composition, performance and AV relationship is still an open question, and part of our research.

2. Transmusicking I

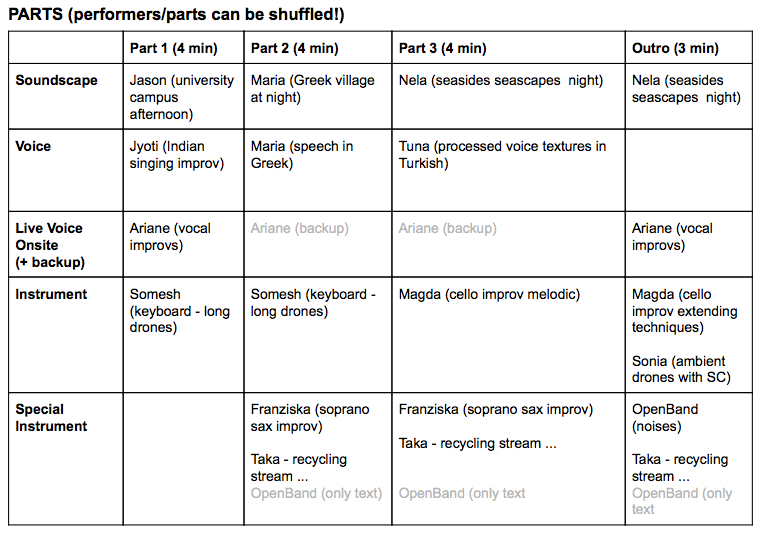

Transmusicking I explores geographical, cultural, technical and artistic challenges of collaborative music making, with musicians distributed globally who use multiple tools to create music. The piece has been performed at the Audio Mostly 2017 conference (Oxford House Theatre. London, UK), featured by:

- FLO: Nela Brown (HR), Magdalena Chudy (PL), Maria Papadomanolaki (GR), Sonia Wilkie (AU), Ariane Stolfi (BR), Tuna Pase (TR), Franziska Schroeder (DE), and

- WiMT: Anna Xambó (CAT/SP), Léa Ikkache (FR), Jason Freeman (US), Somesh Ganesh (IN), Jyoti Narang (IN), Agneya Kerure (IN) and Takahiko Tsuchiya (JP).

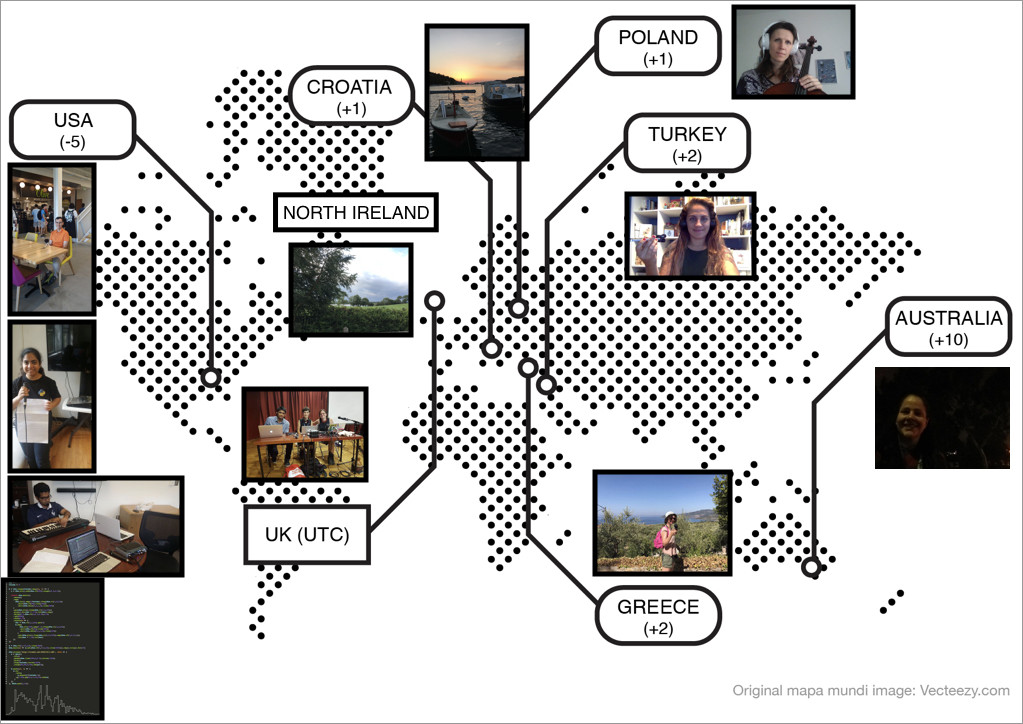

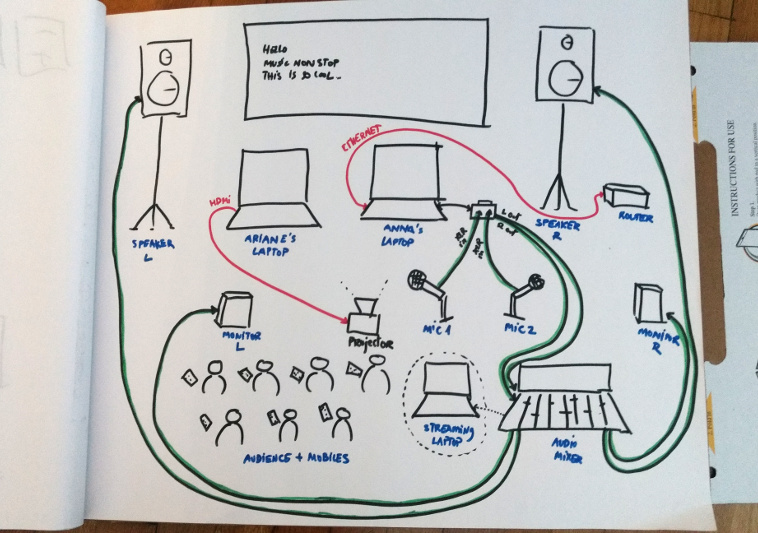

In this performance, fourteen musicians were spread across the globe, with a timezone difference of 15 hours (5 different time zones, in the spirit of Jim Jarmusch’s Night on Earth). We performed with a varied range of instruments, from field recordings, to voice, to acoustic instruments, to digital instruments. Three performers including the author were located in the London venue (see Figure 5). In particular: (1) live voice & the participatory OpenBand web interface (Ariane Stolfi); (2) audio stream back to the distributed musicians (Agneya Kerure); and (3) live mix of the incoming audio streams from Locus Sonus and IceCast using using WACastMix web audio interface (Anna Xambó).

As shown in Figures 3 and 4, the following factors have been relevant:

- geolocation with respect to where the performers are located and what is their timezone;

- space related to a graphical image or collection of images of the venues where the performers are sending audio streams from;

- time with a specific duration for each part of the piece;

- instruments in relation with the timbral and aural characteristics of each instrument.

We have used the geomap for understanding better the others’ spaces and get a unified perspective of our distributed musical experience, which was also shown at the beginning of the performance. We have built and used the score during composition and also performance. However, both images are an ideal extended version of the real performance act: given the large number of musicians, we scheduled long rehearsals where the performers chipped in and out, never joining more than four or five musicians at the same time. In the performance, the supposed instruments for each part were missing and other instruments appeared instead, which is the nature of network music aesthetics. It is an open question how to represent more accurately these serendipitous behaviors beyond the timeline score representation.

3. Hyperconnected Action Painting (HAP)

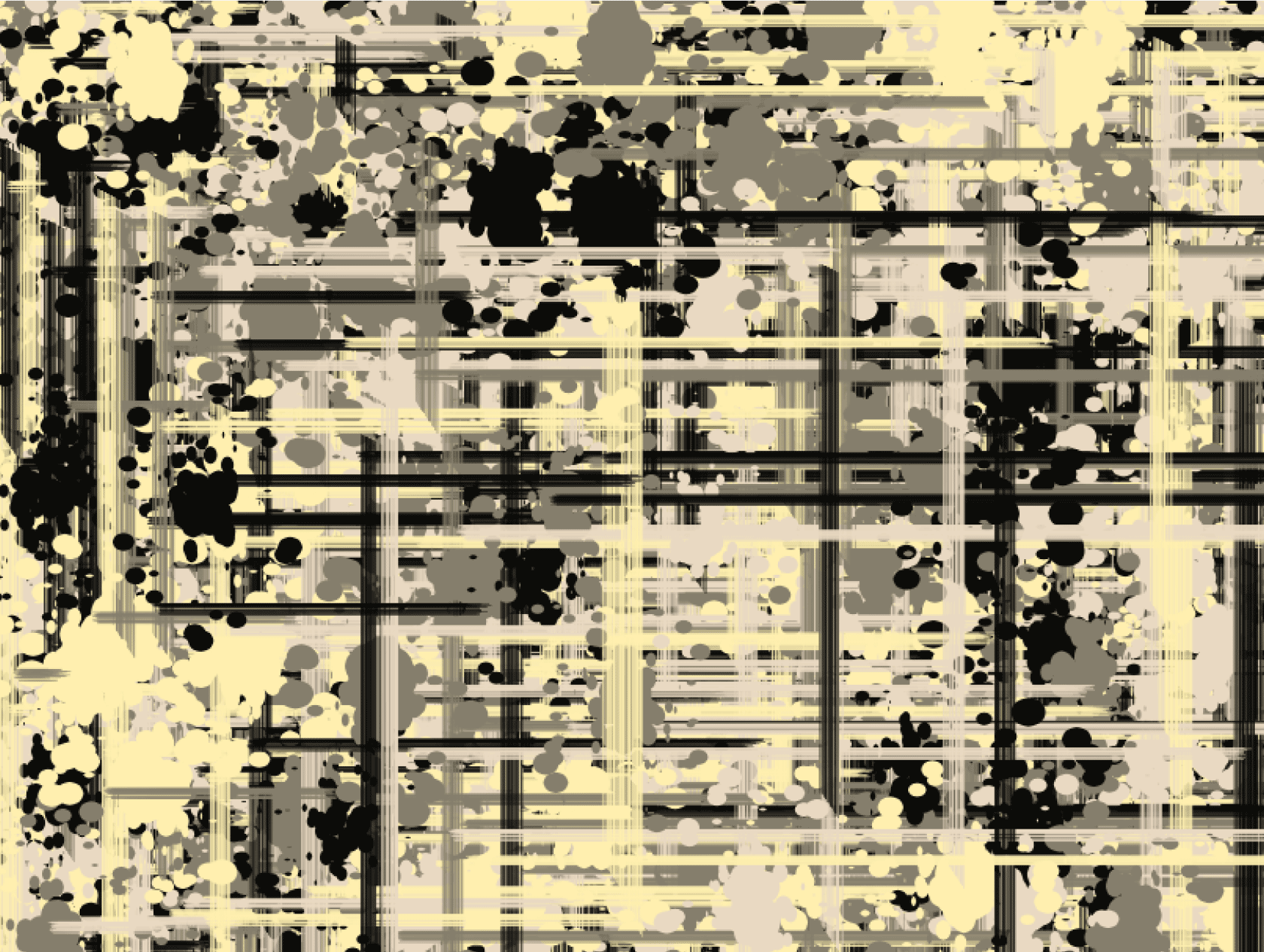

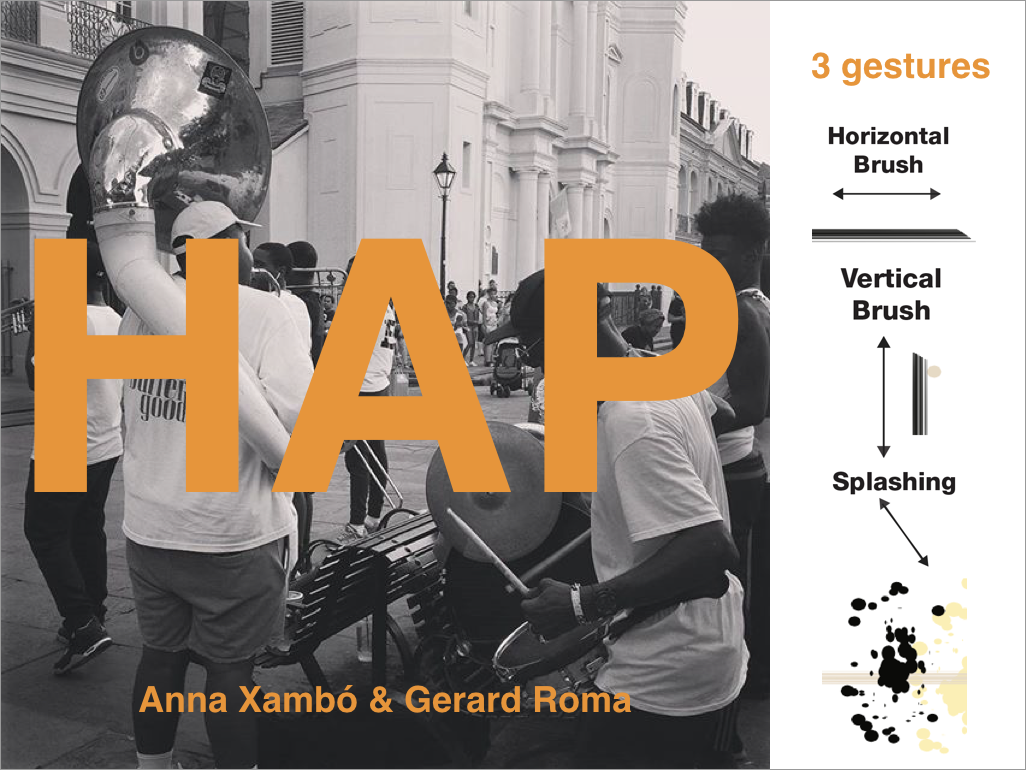

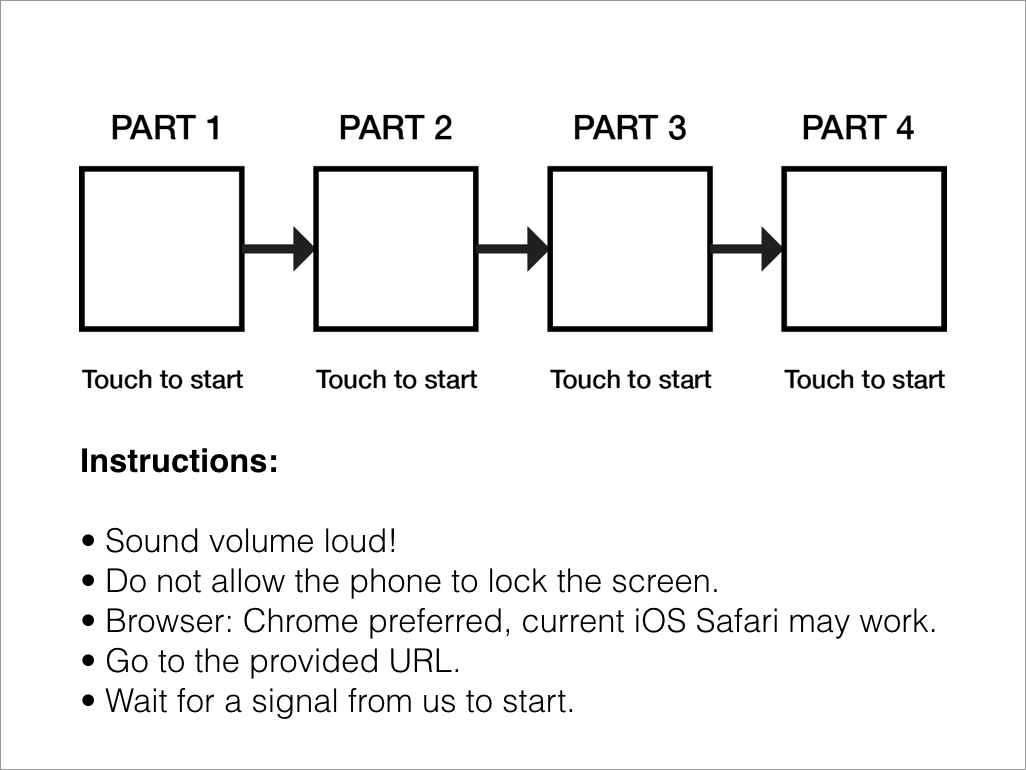

Hyperconnected Action Painting (HAP) is an audience participatory piece by Anna Xambó and Gerard Roma. HAP invites the audience to participate in an immersive experience, inspired by Jackson Pollock’s action painting technique, using their mobile devices. The audience is connected through a web application that recognizes a number of gestures via the mobile accelerometer. The actions of the audience trigger different audio samples influenced by post-jazz aesthetics and are captured on a digital painting (Figure 1). The original audio samples have been recorded from street artists in New Orleans and made publicly available at Freesound.org. The piece has been performed at the Web Audio 2017 conference (Oxford House Theatre. London, UK).

The piece is divided into four sections distinguished by timbre, density, and rhythm. A PA system delivers a general sound stream that is complemented with the audience’s actions. The time for each part of this piece was programmed ahead. We presented the instructions to the audience (see Figures 6 & 7) in the tradition of participatory mobile music. During the performance, there was continuous feedback to the audience’s actions provided by the real-time digital canvas, which was projected on stage.

We have worked with the following main factors:

- rules with respect to what gestures and sounds can be produced and what are the recommended technical requirements;

- time in relation with an absolute time of the piece, with a clear set of parts with pre-defined durations;

- mappings referring to the relationship between gestures and sounds and visuals;

- visual features about the main characteristics of the visual elements; and

- sound features about the main characteristics of the sonic elements.

An important piece of information, when composing and performing, is the distribution and behavior of the audience’s mobile phones, which is unpredictable and is hard to represent in a timeline-based score. Agent-based modeling representations seems a suitable computational model that can inform future iterations.

The Umami Taste

Is a score of a digital music performance, just a guiding tool? Or should it represent the actions, actors and AV features with higher fidelity? It is an open question, as clearly shown in the above “attempts”. We should thoroughly revise the history of aleatoric music, improvisation music, and other similar open forms and their way of approaching structure and content as a system and not a timeline of events. Just to mention a few inspirational examples:

- John Cage (1912-1992)’s indeterminacy as a composing and performance approach;

- Oblique Strategies (1975) by Brian Eno, a deck of cards with short sentences designed to spark creativity among musicians;

- John Zorn’s Cobra (1984) method/system for musical improvisation based on a set of rules.

My impression is that, like with the umami taste, which was discovered much more later than the other tastes (sweetness, sourness, bitterness, saltiness), we are missing a new dimension in the score representation of digital performance. So far, we are moving back and forth from the temporal to the frequency domains, trading off information in each approach. We still need to discover the umami taste in digital performance!

Thank you, Thor, for raising this question. I am looking forward to see the conclusions of your project!

Acknowledgements: The projects presented in this blog post include joint work with Anna Weisling in Beacon, Gerard Roma in Hyperconnected Action Painting, and Nela Brown, Magdalena Chudy, Maria Papadomanolaki, Sonia Wilkie, Tuna Pase, Ariane Stolfi, Franziska Schroeder, Léa Ikkache, Jason Freeman, Somesh Ganesh, Agneya Kerure, Jyoti Narang, and Takahiko Tsuchiya in Transmusicking I.